LG301: .Net Core Linux

(un)Distributed Software

Conway's Law

"Any organization that designs a system (defined broadly) will produce a design whose structure is a copy of the organization's communication structure."

Melvin E. Conway

Having mentioned him on the previous page, I’ve had Melvin Conway on my mind recently. As I sit here preparing to explain what I’m building, how I came to this solution, and why it’s a practical approach, I find myself wondering whether there is something in his law.

This may get a little weird but bear with me...

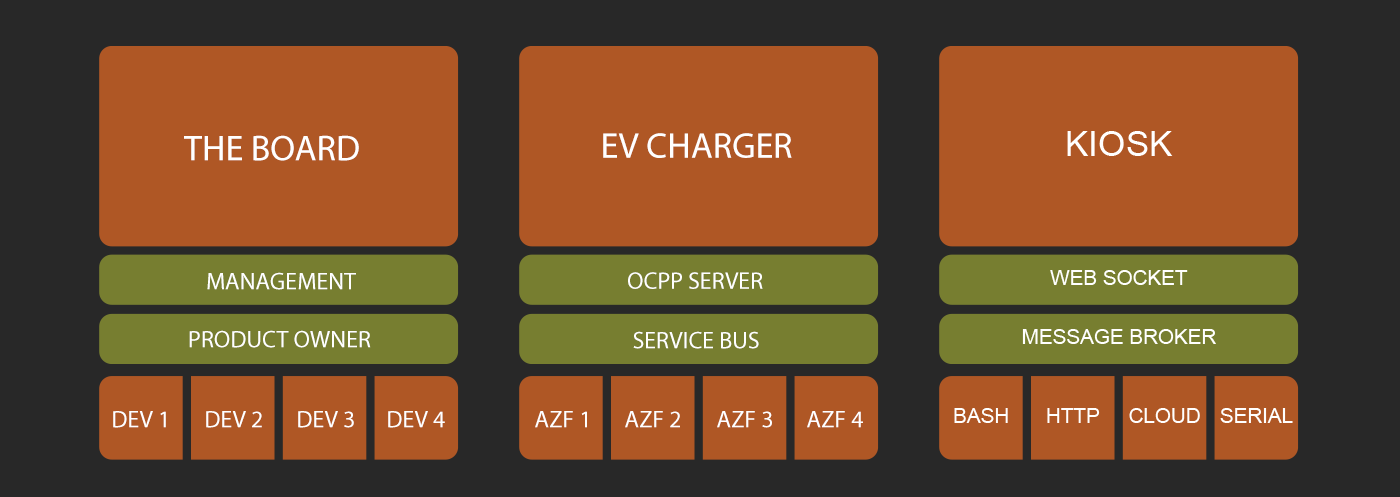

Above are three diagrams: the one on the left represents The Team at work, the one in the middle The Cloud System the team are building, and the one on the right The Linux Application I’m developing to run this device.

Here is a brief explanation of each of them.

The Team

The Board expects information, getting information to The Board is ultimately the function of The Team. The Board only communicates with Management and has no concept of how the information is generated.

The Management delivers the information to The Board that they are given by The Product Owner, they relay back to the Product Owner how the information was received by The Board and pass any requests from The Board back to The Product Owner. They must be able to communicate successfully with both The Board and The Product Owner.

The Product Owner understands the entire system, they communicate with Management and organise the tasks required from the Devs to produce the information The Board expects. Everything goes through The Product Owner.

The Devs get tasks from the Product Owner, they complete the tasks and communicate them back to the Product Owner; this may generate more tasks for them or other Devs. They do not know what the other Devs are doing; they only concern themselves with the tasks they complete.

The Cloud System

The Charger expects information, getting information to The Charger is ultimately the function of the entire system. The Charger only communicates with The OCPP Server and has no concept of how the information is generated.

The OCPP Server delivers the information to The Charger that they are given by The Service Bus, they relay to The Service Bus how that information was received by The Charger and pass any requests from the Charger back to the Service Bus. They must be able to communicate successfully with both The Charger and The Service Bus.

The Service Bus understands the entire system, they communicate with The OCPP Server and organise the tasks required from The Azure Functions to produce the information The Charger expects. Everything goes through The Service Bus.

The Azure Functions get tasks from the Service Bus, they complete the tasks and communicate them back to the Service Bus; this may generate more tasks for them or other Functions. They do not know what the other Functions are doing; they only concern themselves with the tasks they complete.

The Linux Application

The Kiosk expects information, getting information to the Kiosk is ultimately the function of the entire Application. The Kiosk only communicates with The WebSockets and has no concept of how the information was generated.

The WebSockets delivers the information to the Kiosk that they are given by The Broker, they relay to the Broker how the information was received by The Kiosk and pass any requests from the Kiosk back to The Broker. They must be able to communicate successfully with both The Kiosk and The Broker.

The Broker understands the entire system, they communicate with The WebSockets and organise the tasks required from the Background Services to produce the information The Kiosk expects. Everything goes through The Broker.

The Background Services get tasks from The Broker, they complete the tasks and communicate them back to The Broker; this may generate more tasks for them or other Background Services. They do not know what the other Background Services are doing; they only concern themselves with the tasks they complete.

A little fun… but worth a couple of questions...

Did the structure of the team influence the structure of the cloud system? No, I doubt it, but the similarity does help the team to deliver it successfully… perhaps making delivery more likely?

Did the structure of the Cloud System influence the structure of the Linux Application? Absolutely, in the last year the Cloud System has been poked and prodded by partners and due diligence and looks like it will successfully scale. Queuing commands on Service Bus Topics and Queues for Azure Functions has been the foundation that pins it all together.

I would never have considered using Concurrent Queues in a Singleton that distributes tasks to Background Services running in isolation if I hadn’t designed the distributed system.

The Cloud System is proving to be a successful model for processing millions of requests, albeit with immense processing power at its disposal. At first glance, the Linux Application seems to be an effective way to deliver hundreds of requests on the simplest of computers.

We are a long way from the Super Loop of my first module when, coincidentally, I was working alone. The Application is far more manageable, organised, and efficient… but as with the development team in comparison to a lone wolf developer… our overheads are greater.

Inside the Application

The items in the diagram above really are all there is to it. The code is in the repo linked below, and I’ve added annotations to the files I link to. It’s currently only around 2000 lines of executable code, but imagine managing that in a single loop.

Kiosk

Having discussed his work in facilitating physical screen arrays, I worked briefly with Professor Nicolai Marquardt at UCL on a Study Proposal suggesting an interface (coincidentally based on UWP) that could work across multiple devices, using a variety of inputs. The system used a Broker and Web-Sockets to send commands to HTML Pages through JavaScript.

The display is separated from the application: the .Net Application doesn’t need to contain the Kiosk. The Kiosk could be on any hardware, or elsewhere on the local network; it just needs the IP address of the .Net Application so it can receive messages from the WebSockets.

1 HTML page, 1 Stylesheet and 1 JavaScript file… I’ve used jQuery because I’ve been around a while, but React or Vue would work, opening up access to an army of talented developers, sitting happily at their MacBooks, who build beautiful user experiences.

640 x 480 perfect pixels completely under my control, this is beautifully simple.

WebSocket

The middleware I wrote in the previous module is used here unamended. This seems like validation but, as we illustrated above, it’s not clever: it just relays messages!

Broker

A Singleton Class, available to all threads in the application, initiated and added to the Pipeline in Startup.cs, made available to everything else through Dependency Injection.

It has three main tasks: queueing messages for Background Services, passing queued messages to Background Services, and using application logic to route messages to queues and the WebSocket.

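    public async Task RouteMessage(FMessage message)
    {
        if (message.Payload.ContainsKey("colour"))
        {
            // Two independent copies, one per interested recipient.
            FMessage m1 = message.Clone();
            FMessage m2 = message.Clone();
            // First copy goes to the WebSocket queue.
            m1.TargetID = (int)FTargets.Socket;
            await QueueMessage(m1);
            // Second copy goes to the Serial Background Service queue.
            m2.TargetID = (int)FTargets.Serial;
            await QueueMessage(m2);
        }
    }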

In the example above, The Broker is asked to route a Message by a Background Service, and the payload contains the key “colour”.

The Broker knows that the Kiosk and Serial Background Service care about "colour" messages, so it creates two new messages and drops them in the queues for them. That’s it.
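RouteMessage only shows the routing half of the Broker. As a rough sketch of the queueing half, assuming one ConcurrentQueue per target, it might look something like the code below. The shape of FMessage, the enum values and the TryGetMessage method are hypothetical stand-ins for illustration, not the code in the repo.

```csharp
using System;
using System.Collections.Concurrent;
using System.Collections.Generic;
using System.Threading.Tasks;

// Hypothetical stand-ins: only the names FMessage, FTargets, TargetID,
// Payload, Clone and QueueMessage appear in the article; the rest is assumed.
public enum FTargets { Socket = 1, Serial = 2 }

public class FMessage
{
    public int TargetID { get; set; }
    public Dictionary<string, string> Payload { get; set; } = new Dictionary<string, string>();

    // Copy the payload so each recipient gets an independent message.
    public FMessage Clone() => new FMessage
    {
        TargetID = TargetID,
        Payload = new Dictionary<string, string>(Payload)
    };
}

public class Broker
{
    // One thread-safe queue per target, created the first time it is needed.
    private readonly ConcurrentDictionary<int, ConcurrentQueue<FMessage>> _queues =
        new ConcurrentDictionary<int, ConcurrentQueue<FMessage>>();

    // Drop a message in the queue that belongs to its target.
    public Task QueueMessage(FMessage message)
    {
        _queues.GetOrAdd(message.TargetID, _ => new ConcurrentQueue<FMessage>())
               .Enqueue(message);
        return Task.CompletedTask;
    }

    // Called by a Background Service to collect its next message, if any.
    public bool TryGetMessage(FTargets target, out FMessage message)
    {
        message = null;
        return _queues.TryGetValue((int)target, out var queue)
            && queue.TryDequeue(out message);
    }
}
```

Because ConcurrentQueue is safe to use from several threads at once, any number of services can enqueue and dequeue without extra locking, which is what lets a single Broker instance be shared across the whole application.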

Background Services

Background Services are initiated in Startup.cs and run independently of anything else. They are passed access to the Broker through Dependency Injection during initiation. We currently have four services, three of which are implemented.

Serial - Two threads, one to receive Serial messages from the Arduino, one to send them to it.

Bash - Single thread to send BASH commands to the underlying Linux System. This enables us to launch the browser in kiosk mode, and turn on and off services when we change mode.

HTTP - Two threads: one watches the Broker’s Queue for messages to send to external APIs, the other GETs info at timed intervals and passes it to the Broker.

Cloud - This will make a connection with the IoT Server we built in the last module.
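To give a feel for the pattern the services above share, here is a minimal sketch of a single-threaded worker built on the BackgroundService base class from Microsoft.Extensions.Hosting. The cut-down Broker, the QueueBash and TryGetBash methods, the 50 ms idle delay and the Registration helper are all assumptions made for illustration, and the worker only prints the command rather than running it.

```csharp
using System;
using System.Collections.Concurrent;
using System.Threading;
using System.Threading.Tasks;
using Microsoft.Extensions.DependencyInjection;
using Microsoft.Extensions.Hosting;

// Hypothetical, cut-down Broker: a single queue of text commands.
public class Broker
{
    private readonly ConcurrentQueue<string> _bashQueue = new ConcurrentQueue<string>();
    public void QueueBash(string command) => _bashQueue.Enqueue(command);
    public bool TryGetBash(out string command) => _bashQueue.TryDequeue(out command);
}

// A worker that knows only about the Broker, never about other services.
public class BashService : BackgroundService
{
    private readonly Broker _broker;

    // The Broker singleton arrives through constructor injection.
    public BashService(Broker broker) => _broker = broker;

    protected override async Task ExecuteAsync(CancellationToken stoppingToken)
    {
        while (!stoppingToken.IsCancellationRequested)
        {
            if (_broker.TryGetBash(out var command))
                Console.WriteLine($"would run: {command}"); // placeholder for the real work
            else
                await Task.Delay(50, stoppingToken); // idle briefly when the queue is empty
        }
    }
}

public static class Registration
{
    // Roughly what would sit in Startup.ConfigureServices.
    public static void AddWorkers(IServiceCollection services)
    {
        services.AddSingleton<Broker>();          // one Broker shared by everything
        services.AddHostedService<BashService>(); // started and stopped by the host
    }
}
```

Registering the Broker as a singleton and each worker as a hosted service means the host owns their lifetimes, and each worker's only dependency is the Broker it is handed.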

A Cohesive Single Source Of Truth

The first thing the Kiosk does, after opening the WebSocket connection, is ask the Broker for the current Mode. The Kiosk can’t set or change the Mode because, as we discussed earlier, Mode has a physical state, Mode is connected to a 70-year-old lever!

    socket = new WebSocket(uri);
    socket.onopen = function (event) {
        console.log("opened connection to " + uri);
        // If we've connected send a message to the Broker to get the Twin...
        socket.send("twin,get");
    };

This command is called twin. Currently it just returns the mode, but the name hints at where I’m going with this… to what David Gelernter called Mirror Worlds back in 1991, and what Unity referred to as 'A Cohesive Single Source Of Truth'.

Look in the Broker and you’ll see there are a few random fields storing information that represents the device state. We are also loading in users’ playlists and need to keep track of what’s playing… We need a digital file that represents the current state of the device, we need a Digital Twin.

The Broker is the correct place for the Twin, as it needs to understand the device to route messages and can share the Twin with the entire system. I have been using Digital Twins to represent physical devices (EV Chargers) in my day job, and it’s hard to imagine a case in IoT where the inclusion of the virtual object wouldn’t be useful.
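As a sketch of where this could go, the random fields might be gathered into one small object that the Broker serialises whenever the Kiosk sends "twin,get". Everything named here (DeviceTwin, NowPlaying, TwinHandler, the JSON reply) is an assumption for illustration; the article only confirms that Mode exists and that the current track needs tracking. It also assumes System.Text.Json is available.

```csharp
using System;
using System.Text.Json;

// Hypothetical shape for the Twin: one object holding the device state.
public class DeviceTwin
{
    public string Mode { get; set; } = "unknown";
    public string NowPlaying { get; set; } = "";
}

public class TwinHandler
{
    private readonly object _lock = new object();
    private readonly DeviceTwin _twin = new DeviceTwin();

    // Only hardware-facing services should call this; the Kiosk cannot set Mode.
    public void SetMode(string mode)
    {
        lock (_lock) { _twin.Mode = mode; }
    }

    // Handles the Kiosk's "twin,get" text command and returns a JSON reply,
    // or null for anything it does not recognise.
    public string Handle(string command)
    {
        var parts = command.Split(',');
        if (parts.Length == 2 && parts[0] == "twin" && parts[1] == "get")
        {
            lock (_lock) { return JsonSerializer.Serialize(_twin); }
        }
        return null;
    }
}
```

Keeping the state in one object behind one lock means every reader, Kiosk included, sees the same snapshot, which is the point of a single source of truth.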

Isn’t that the truth!